A Databricks SQL Endpoint is a compute cluster, quite similar to the cluster we have known in the Databricks that allows you execute SQL commands on data objects within the Databricks environment. The Databricks allows you to connect using various tools such as DBT, connect to Notebook using Azure Data Factory, etc. But there is no direct method to connect Databricks SQL endpoint warehouse. In this article, we will check how to connect to Databricks SQL endpoint from Azure Data Factory (ADF).

Connect to Databricks SQL Endpoint from Azure Data Factory

As of now, Azure Data Factory (ADF) does not provide any component that directly connects to the Databricks SQL endpoint cluster. However, using “Azure Databricks” connector in the “Data Flow“, you can connect to Databricks Notebook and execute pre-defined queries.

The good news is that Databricks provides support ODBC connection and Azure Data Factory contains ODBC connector. We can use the “ODBC” connector to connect Databricks SQL Endpoint warehouse and execute any query.

Connect to Databricks SQL Endpoint from Azure Data Factory ODBC Connector

Following steps allows you to establish connection from Azure Data Factory to Databricks SQL endpoint.

- ODBC Integration Runtime (IR) Setup

- Download and Install Databricks ODBC Driver

- Get Connection Details from Databricks SQL Endpoint

- Create Azure Data Factory (ADF) ODBC Linked Services to Databricks SQL Endpoint

- Create Azure Data Factory (ADF) Source Data Sets

Now, let us check how to use the ADF ODBC connector to connect Databricks SQL warehouse.

ODBC Integration Runtime (IR) Setup

Use the following steps to create an ODBC linked service to Databricks SQL Endpoint in the Azure portal UI.

- Browse to the Manage tab in your Azure Data Factory and select Linked Services, then click New:

- Search for ODBC and select the ODBC connector.

- Choose to connect via integration runtime (IR)

- You need to create your own integration runtime to install Databricks ODBC drivers. Select “New” from drop a down to create new integration runtime.

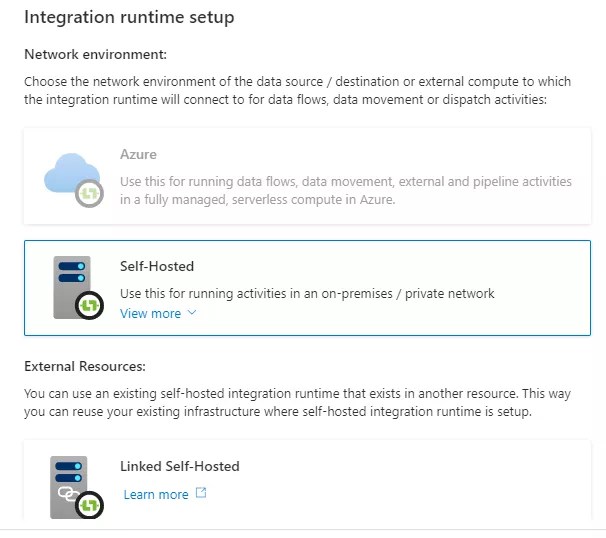

- Select “Self-hosted” option in Integration runtime setup. And click on create. It will take you to integration runtime setting.

- Choose Option 2: Manual Setup

- Download and install the integration runtime on your on-premises or private network machine. Copy authentication key1 and key2. You can use key in step to register integration runtime.

- Once installation is completed, you can enter the authentication key that you copied and click on Register.

- Follow the on-screen instructions and once registered. Your local machine will be connected to ADF via integration runtime.

Download and Install Databricks ODBC Driver

Download and install Databricks ODBC driver onto the machine where integration runtime (IR) is running. You can download latest ODBC driver from following link.

ODBC Driver link: https://www.databricks.com/spark/odbc-drivers-download

Get Connection Details from Databricks SQL Endpoint

Now, login to Databricks workspace and copy the required information such as Personal Access Token, host url, HTTPPath, etc.

- Generate Databricks User Token

You can generate new user taken at user -> User Settings -> Personal Access Token screen.

- Get JDBC/ODBC Connection Details

Go to SQL Warehouses -> SQL Warehouse Name -> Connection details and copy required details.

- Databricks ODBC Connection String

The following ODBC connection string can be used to connect to Databricks SQL endpoint from Azure Data Factory (ADF):

Driver=<odbc driver>;Host=<host url>;Port=443;HTTPPath=<httpPath>;ThriftTransport=2;SSL=1;AuthMech=3;UID=token;PWD=<personal access token>Create ADF Linked Services to Databricks SQL Endpoint

Once integration runtime is up and running, ODBC driver is installed and you have ODBC connection details, you can create an ODBC linked service to query Databricks SQL Endpoint.

- Provide a meaningful Name

- Select an Integration Runtime (IR) that you created earlier

- Provide an ODBC connection string by replacing values that you copied from Databricks SQL warehouse.

Driver=<odbc driver>;Host=<host url>;Port=443;HTTPPath=<httpPath>;ThriftTransport=2;SSL=1;AuthMech=3;UID=token;PWD=<personal access token>- Select Authentication type as anonymous, test connection and click on create

Create Azure Data Factory (ADF) Source Data Sets

Following steps allows you to create an ODBC source data set.

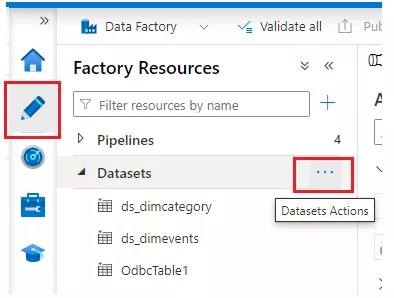

- Browse to the Author tab in your Azure Data Factory.

- Choose a create New dataset from Datasets Actions. A new Dataset window appears;

- Search and select ODBC. Click on Continue.

- In the set property window, provide a meaningful Name and ODBC Linked Service. Choose a Databricks Table name and Click on Ok button.

You can now use this Data set anywhere in your Azure Data Factory.

Related Articles,

- How to Access Azure Blob Storage Files from Databricks?

- How to Connect to Snowflake from Databricks?

Hope this helps 🙂